Preseason Polls: Who Tends to be Overrated and Underrated?

Posted by nvr1983 on October 20th, 2011

With the release of today’s ESPN/USA Today preseason poll and next week’s Associated Press preseason poll, college basketball fans across the country can go into full-fledged sniping mode at where their favorite team is ranked or where a rival is ranked (unless you are North Carolina, in which case your only complaint is that you did not get every single #1 vote). We thought it would be interesting to take a look at the historical trends of how teams perform throughout the regular season as compared to where the selected media and coaches rank those teams coming into the season.

Obviously, there are some limitations here like the fact that we are basing this off regular season results and ignoring postseason success and that we are relying on the opinions of those coaches and writers to determine how successful a team is. On the first issue, we will agree that most college basketball fans (casual and otherwise) will probably remember a team’s success based on their performance in March rather than the overall body of work. We like to think that we are a bit more nuanced in our approach to basketball and think that a team’s overall performance is more than just six games in March. Consequently, we feel that their regular-season performance is probably a better indicator of how good they were. If you feel strongly the other way, leave your reasoning in the comment section and we may reconsider our view. The second issue is more legitimate and we seriously considered using the end-of-season KenPom.com rankings as the basis for overall performance, but in the end we decided to compare apples-to-apples and include coach/media bias in the preseason and end-of-season rankings. Of course, we may go back and do this exercise with the Pomeroy rankings in the near future.

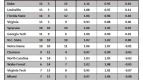

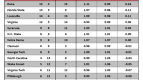

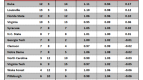

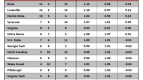

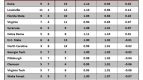

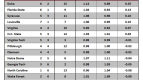

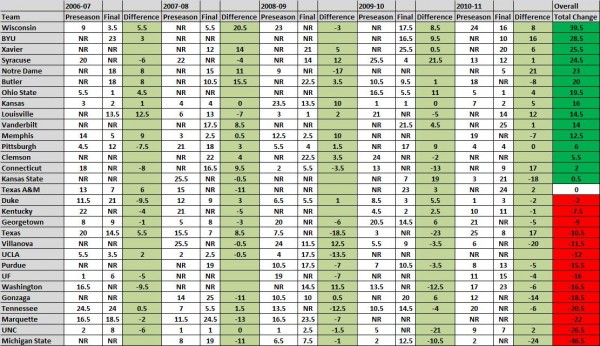

As for our actual analysis, we decided to look at the past five years and compare teams’ combined AP and ESPN/USA Today preseason rankings with their combined ranking in those two polls at the end of the regular season (taken from here). Although nearly 80 programs were ranked in one or both of the polls at either the beginning or end of the regular season, only 30 programs appeared in the beginning-of-season or end-of-season rankings in three or more seasons. For our combined ranking, we just took the mean of the two polls. If a team was not ranked, we gave it the #26 ranking; this only applied when a team was ranked in one poll but not the other, or ranked at one end of the season (beginning or end) but not the other.
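For readers who want to follow the arithmetic, here is a minimal sketch of the combined ranking described above. The function names and the use of `None` for an unranked team are our own illustrative choices, and we assume a positive change means a team finished better than its preseason ranking (which matches how rises and drops are reported in this post).

```python
UNRANKED = 26  # stand-in rank for a team missing from a poll, per the method above


def combined_rank(ap, coaches):
    """Mean of the AP and ESPN/USA Today ranks; None means unranked (#26)."""
    ranks = [UNRANKED if r is None else r for r in (ap, coaches)]
    return sum(ranks) / len(ranks)


def season_change(pre_ap, pre_coaches, end_ap, end_coaches):
    """Preseason combined rank minus end-of-season combined rank.

    Assumed sign convention: positive = the team moved up during the season.
    """
    return combined_rank(pre_ap, pre_coaches) - combined_rank(end_ap, end_coaches)


# Hypothetical teams, not taken from the actual poll data:
dropped_out = season_change(18, 18, None, None)  # #18 in both polls, unranked at the end
late_riser = season_change(None, 24, 10, 12)     # ranked in only one preseason poll
```

In the first hypothetical case the team scores 18 - 26 = -8; in the second, the preseason combined rank is (26 + 24) / 2 = 25 and the final is 11, for a change of +14.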

There are a couple of interesting trends here. First off there are 15 teams that exceeded their preseason ranking on average, one that exactly matched it, and 15 that fell short of their preseason ranking on average. Looking a little deeper (don’t worry, we aren’t going to go John Ezekowitz on you), there are nine teams that had only a single-digit aggregate change in their ranking over the five-year period, which seems insignificant to us. Now some of those teams, like Kansas State (+19 in 2009-10 and -18 in 2010-11) or Connecticut (-13 in 2009-10 and +17 in 2010-11), have a high variance, but most schools tend to follow a similar trend. Despite claims of favoritism from many fans, Duke tends to perform pretty close to how they are ranked in the preseason. The same cannot be said for their Tobacco Road rivals UNC, who despite four appearances in the Elite Eight in the past five years have underperformed by this metric, although that is due almost entirely to their 2009-10 season, when they failed to make the NCAA Tournament. The other prominent East Coast power Kentucky tends to perform close to what their preseason ranking would suggest; they are who we thought they were, even during the Billy Gillispie years when the media and coaches knew the team wasn’t going to be good. The other traditional powers, Kansas and UCLA, are similar to UNC in that they tend to perform to their preseason ranking with the exception of one season (2008-09 in this case, when Kansas exceeded and UCLA underperformed their preseason ranking).
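To show why a small aggregate change can hide a lot of movement, here is a rough sketch using only the two seasons quoted above for Kansas State and Connecticut. The other three seasons are not given in the text, so these sums are not the five-year totals from our table, and the choice of population standard deviation as the measure of spread is our assumption for illustration.

```python
from statistics import pstdev

# Per-season ranking changes quoted in the text (two seasons each;
# the remaining three seasons are not given, so they are left out).
changes = {
    "Kansas State": [19, -18],
    "Connecticut": [-13, 17],
}


def summarize(per_season):
    """Return (aggregate change, spread) for a list of per-season changes."""
    return sum(per_season), pstdev(per_season)


summary = {team: summarize(vals) for team, vals in changes.items()}

for team, (total, spread) in summary.items():
    print(f"{team}: aggregate {total:+d}, spread {spread:.1f}")
```

Over those two seasons alone the swings nearly cancel out, which is how a team can look accurately ranked in aggregate while being badly misjudged in each individual year.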

Outside of the traditional powers, there are quite a few other interesting teams. For example, the teams on the top and bottom of our rankings both come from the Big Ten, with Wisconsin outperforming and Michigan State underperforming their preseason ranking the most. Most college basketball fans can understand the Badgers being there, given how low expectations are for them coming into the season and how they always seem to surprise people as the season progresses. Michigan State is a little more confusing because most people associate Tom Izzo with well-coached teams that tend to exceed expectations. In this case, the underperformance may reflect the methodology and the bias in ranking Izzo teams, which tend to get the benefit of the doubt early in the season. As the mid-season losses pile up, the voters bail on the team before Izzo turns them around for an NCAA Tournament run, which isn’t reflected in these rankings.

A more interesting observation may be in the Cinderellas of the college basketball world, where performances are widely divergent. Butler, BYU, and Xavier (bear with me, Musketeer fans, on the “Cinderella” description) all rank near the top in exceeding their preseason ranking while Gonzaga, the archetypal modern-day Cinderella, is near the bottom of the list. There are probably a myriad of reasons for Gonzaga’s underperformance, including that most people no longer even consider them a Cinderella. Consequently, they are ranked like an established program in preseason polls and don’t have as much room to move up as the season progresses, although this doesn’t seem to affect many other teams that are traditionally highly ranked.

Our final observation concerns the biggest intra-season changes in team rankings during the five-year period. Not happy with where your team is ranked? There is no need to worry because those rankings can change quite a bit (and, unlike in college football, you can still win the national championship even if you are not in the top two at the end of the regular season). If you are still concerned, just look to Syracuse, who rose 21.5 spots in 2009-10, and Notre Dame, who rose 21 spots last season. On the other hand, if your team is ranked highly, don’t get too comfortable, as that can change in a hurry too. Just ask fans of Michigan State (dropped from #2 to out of the polls last year) and Texas (dropped from #3 to out of the polls in 2009-10).

I love Butler, but your math on them does not add up. In 2010-11 they went from preseason 18 to Final NR. That should be a negative 8. You show it as a positive 8.

Rory

Indianapolis

I think an interesting correlation might be comparing tournament performance in the previous year to accuracy in the preseason poll in the next. The best measure for this would be Pete Tiernan’s PASE. It works perfectly for the hypothesis. The thinking is that a team who goes far despite having been a mediocre team all year, will be overrated in the next year’s poll. A perfect example of this is Michigan St. from 2009-10 to 2010-11. They made a great run to the Final Four in ’10, but they were a low seed, and barely beat everyone on the way there, while not beating a team seeded higher than 4th. Based largely off that run, they were ranked 2nd, but ended up playing more like the 5 seed that lost some pieces. On the opposite end, you have teams like Wisconsin and BYU, who are known for coming up a bit short in the tourney and have poor PASE numbers. Consequently, they are underrated at the start of almost every season. After a cursory check, there is a big mix on whether it fits teams or not (Marquette and Xavier are two big exceptions), but 5 years is a pretty small sample size. I’d bet if this were expanded for the entire 64 team era (as far back as PASE stats go), you might see better correlations…or not, but it would be interesting to know.

i really like this concept but i think that the sample size of 5 years is too small. 1 down year can completely skew these results. Use UNC for an example: throw out their 09-10 season and they are -5.5 in 4 out of the 5 years, which shows they are being rated nearly spot on year in, year out.