On Improving the NCAA Tourney: Part I

Posted by Brad Jenkins (@bradjenk) on October 27th, 2016

In June the National Association of Basketball Coaches (NABC) empaneled an ad hoc committee whose stated purpose was to provide the NCAA Division I Men’s Basketball Selection Committee the perspective of men’s basketball coaches and their teams regarding selection, seeding and bracketing for the NCAA Tournament. With that in mind, we decided to give some specific recommendations of our own that would enhance an already great event. Let’s first focus on some improvements for the selection and seeding process.

John Calipari is one of the members of the recently created NABC ad hoc committee formed to make recommendations to the NCAA Selection Committee. (Kevin Jairaj/USA TODAY Sports)

Bye Bye, RPI

Whenever the subject arises of improving the ratings system that the Selection Committee uses, there is one recurring response: either dump the RPI, or, at a minimum, dramatically limit its influence. The good news is that we may finally be headed in that direction. About a month after the NABC formed its committee and began communicating with the NCAA, the following statement was made as part of an update regarding the current NCAA Selection Committee:

The basketball committee supported in concept revising the current ranking system utilized in the selection and seeding process, and will work collaboratively with select members of the NABC ad hoc group to study a potentially more effective composite ranking system for possible implementation no earlier than the 2017-18 season.

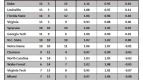

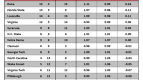

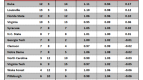

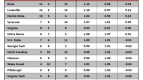

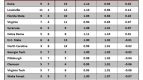

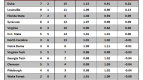

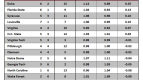

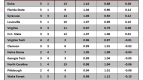

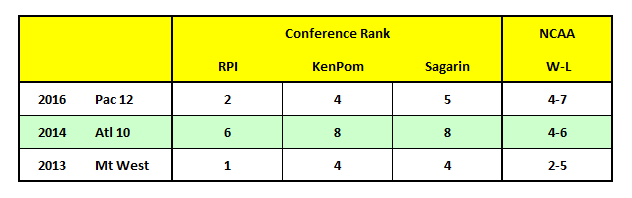

Moving away from the RPI as the primary method for sorting teams into tiers would be a huge step toward improving the balance of the field. We have heard committee members for years make it a point to mention that a school’s RPI ranking is just one factor of many when analyzing its resume. But then the same committee members turn right around and cite a team’s record against the top-50 or top-100, or its strength of schedule rating, all of which, of course, are derived from the RPI. That means that the old outdated metric is still a highly significant influence on how teams are judged. The real harm within the current system is that the RPI is so interconnected that entire conferences are frequently overrated, which leads to all of their selections being over-seeded by the committee. Placing five to seven teams well above their proper seed lines can have a substantial negative impact on the overall balance and fairness of the entire NCAA Tournament. Here are some recent examples:

In each of the above scenarios, the conference’s RPI rating (and therefore the rating of all its members) was considerably higher than its ranking in the two most respected metrics in the business: Ken Pomeroy’s and Jeff Sagarin’s. When the committee seeded schools from these three conferences, it clearly trusted the RPI’s inflated team evaluations, placing their teams several slots higher in the bracket than they most likely deserved. And probably not coincidentally, teams from those three leagues very much underperformed in the NCAA Tournament in those three years. In fact, that lousy 4-7 performance from the Pac-12 last year was achieved entirely against lower-seeded opponents. This isn’t to say that the Pac-12 wasn’t a good conference in 2015-16, but it was obvious to nearly any observer that it really didn’t have seven of the nation’s top 32 teams (which was how it was seeded).

Location, Location, Location

Switching to a straight-up composite ranking derived from multiple metrics, however, won’t completely fix things. We would like to see the committee take another step and incorporate game location when evaluating teams for both selection and seeding. Currently, a school’s win-loss record in road and/or neutral games is all we hear about when a team’s performance away from home is evaluated, but that fails to tell us how strong the opponents were in those particular games. The solution here is to come up with a simple way to adjust how we rate a team’s schedule based on both factors: quality of the opponent, and location of the game. According to KenPom, beating the 90th-best team on the road is about as difficult as defeating the 50th-best team at a neutral site or the 20th-best team at home. So let’s just come up with an arbitrary number (maybe 25) that we add to or subtract from an opponent’s rating to determine the true difficulty of each game. As an example, if a school plays the 40th-rated team at home, that game is adjusted to a difficulty rating of 65; the same opponent on the road would be adjusted to 15. KenPom does something similar on his site, giving an “A” rating to a game played against a Top 25 team after the contest is adjusted for location.
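To make the arithmetic concrete, here is a minimal sketch of that location adjustment. It assumes the suggested offset of 25, assumes that road games subtract the offset while home games add it (the text only spells out the home case), and floors the result at 1. The function names and the sample schedule are invented for illustration; none of this is the committee’s or KenPom’s actual method.

```python
# Hypothetical constant: the text suggests "maybe 25" as an arbitrary offset.
LOCATION_OFFSET = 25


def adjusted_difficulty(opponent_rank, location):
    """Return the location-adjusted rank of a game (lower means tougher)."""
    if location == "home":
        adjusted = opponent_rank + LOCATION_OFFSET
    elif location == "road":
        # Assumption: road games are the mirror image of home games.
        adjusted = opponent_rank - LOCATION_OFFSET
    elif location == "neutral":
        adjusted = opponent_rank
    else:
        raise ValueError(f"unknown location: {location!r}")
    # Assumption: an adjusted rank is never allowed to drop below 1.
    return max(1, adjusted)


def record_vs_adjusted_top(games, cutoff=25):
    """Count (wins, losses) in games whose adjusted rank is within the cutoff."""
    wins = losses = 0
    for opponent_rank, location, won in games:
        if adjusted_difficulty(opponent_rank, location) <= cutoff:
            if won:
                wins += 1
            else:
                losses += 1
    return wins, losses


# Invented schedule for illustration only: (opponent rank, location, won?)
sample_games = [
    (40, "home", True),     # adjusts to 65, outside the top 25
    (45, "road", False),    # adjusts to 20, inside the top 25
    (10, "neutral", True),  # stays at 10, inside the top 25
    (5, "home", True),      # adjusts to 30, outside the top 25
]
print(adjusted_difficulty(40, "home"))
print(record_vs_adjusted_top(sample_games))
```

Note how a home win over the fifth-rated team falls out of the adjusted top 25 under this offset, while a road game against the 45th-rated team moves into it.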

Was Wayne Tinkle’s Oregon State squad a little overrated last year? Probably. (Godofredo Vasquez/USA Today)

To see how much this could impact things, just look at Oregon State’s schedule from last year. Using the RPI system as its sorting tool, the committee saw that Oregon State had compiled a record of 4-8 versus the top 25 teams. Had it instead used KenPom’s adjusted top-25, clearly a more accurate assessment of schedule strength, the Beavers’ record would have been a much less impressive 1-8, which almost assuredly would have lowered the #7 seed the committee gave Oregon State.

Two More For Consideration

Until several years ago, the committee valued teams’ recent performance more than an overall season’s results. We would like to see a return to that protocol, but it should be evaluated in a different way. Rather than simply considering schools’ win-loss records in their last 10 games, the committee must find a way to equalize the metric. At the end of a season, conference tournament play can create extra contests for some teams but not others, which pushes games from five weeks earlier out of the 10-game window for the teams that play more. We’d instead like to see the committee start with a fixed date of review (maybe February 1) and then consider performance adjusted for game location in the same way described above. The logic for putting slightly more emphasis on recent play is simple: Teams develop differently over the course of the season (some get better; others get worse), but it is the March version of that team that will be competing in the Big Dance.

One last suggestion for the committee is to change the policy of not counting games played against non-Division I schools when evaluating teams. The stated intent is to not reward teams for beating up on those non-DI schools, but by not counting those games, the committee actually does the opposite. Total number of wins is no longer a major factor in the committee’s decision-making process, but non-conference strength of schedule most certainly is. Right now, if a team plays a Division II or NAIA school as its patsy game instead of a very weak Division I squad, its overall schedule strength is not negatively affected. It would be much fairer to give all non-Division I opponents a rating equal to the lowest Division I program (#351), and thereby appropriately account for scheduling such games.
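A quick sketch shows how much that change could matter. It treats schedule strength as a plain average of opponent ranks, which is a deliberate simplification rather than how the RPI or any real metric computes it, and it marks non-Division I opponents with `None`. The function name and the three-game schedule are hypothetical.

```python
# Rank assigned to any non-Division I opponent, per the suggestion in the text.
LOWEST_D1_RANK = 351


def average_opponent_rank(opponent_ranks, count_non_d1=True):
    """Average opponent rank, where None marks a non-Division I opponent.

    With count_non_d1=False the non-D-I games are skipped (the current policy);
    with count_non_d1=True they count as rank 351 (the proposed policy).
    """
    ranks = []
    for rank in opponent_ranks:
        if rank is None:
            if count_non_d1:
                ranks.append(LOWEST_D1_RANK)
        else:
            ranks.append(rank)
    if not ranks:
        raise ValueError("no countable games in schedule")
    return sum(ranks) / len(ranks)


# Invented non-conference schedule: two D-I opponents and one non-D-I opponent.
schedule = [50, 150, None]
print(average_opponent_rank(schedule, count_non_d1=False))
print(average_opponent_rank(schedule, count_non_d1=True))
```

In this made-up case the average opponent rank is 100 when the non-D-I game is ignored and falls to roughly 184 once it counts as #351.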

Next week in Part Two we will look at some possible improvements to the NCAA Tournament’s bracketing process.